I'll be honest – I've been implementing AI chatbots for clients, but I felt like I was just plugging things together without really understanding what was happening under the hood. So I decided to build something from scratch: a word prediction engine, just like the autocomplete on your phone.

The result? A simple but powerful N-gram language model that actually taught me more about NLP in one weekend than months of reading documentation. And I'm sharing everything I learned.

Why Build Something "Old School"?

I know what you're thinking. "Why build an N-gram model when GPT-4 exists?" Fair question. But here's the thing – understanding the fundamentals makes you so much better at using modern tools.

It's like learning to drive a manual transmission before getting a Tesla. You don't strictly need to, but it helps you understand what's actually happening when things go wrong (or right).

Plus, N-gram-style statistical models have long powered (and in some places still power) everyday tools like:

- 📱 Your phone's keyboard suggestions

- 📧 Email subject line predictions

- 🔍 Google search autocomplete

- ✍️ Basic writing assistants

They're fast, lightweight, and surprisingly effective for specific use cases.

The "Aha!" Moment: It's Just Counting Words

Here's what blew my mind: at its core, word prediction is just sophisticated counting.

Let's say you train on this sentence: "The quick brown fox jumps over the lazy dog."

The model learns patterns like:

- After "the", you see "quick" once and "lazy" once

- After "quick", you always see "brown"

- After "brown", you always see "fox"

That's it. You count how many times each word follows another word, then predict the most common one.
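Here is that counting idea as a minimal Node.js sketch. It is not the repo's code, and the names `countBigrams` and `predictNext` are made up for illustration. It lowercases the text, stores counts in nested Maps, and breaks ties by keeping whichever follower was seen first:

```javascript
// Minimal bigram counter: for each word, count which words follow it.
function countBigrams(sentence) {
  // Lowercase and keep only runs of letters, so "The" and "the" share counts.
  const words = sentence.toLowerCase().match(/[a-z]+/g) || [];
  const counts = new Map(); // word -> Map(nextWord -> count)
  for (let i = 0; i < words.length - 1; i++) {
    const current = words[i];
    const next = words[i + 1];
    if (!counts.has(current)) counts.set(current, new Map());
    const followers = counts.get(current);
    followers.set(next, (followers.get(next) || 0) + 1);
  }
  return counts;
}

// Predict the follower with the highest count (ties keep the first one seen).
function predictNext(counts, word) {
  const followers = counts.get(word.toLowerCase());
  if (!followers) return null; // word never seen in training
  let best = null;
  let bestCount = 0;
  for (const [next, count] of followers) {
    if (count > bestCount) {
      best = next;
      bestCount = count;
    }
  }
  return best;
}

const counts = countBigrams("The quick brown fox jumps over the lazy dog.");
console.log([...counts.get("the")]);      // [ [ 'quick', 1 ], [ 'lazy', 1 ] ]
console.log(predictNext(counts, "quick")); // brown
console.log(predictNext(counts, "brown")); // fox
```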

Of course, there's more nuance (bigrams vs trigrams, smoothing for unseen data, probability distributions), but the basic idea is beautifully simple.
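The "probability distributions" part is just those counts normalized. For a bigram model, the standard maximum-likelihood estimate divides how often a word pair occurs by how often the first word appears as a context:

$$
P(w_i \mid w_{i-1}) = \frac{C(w_{i-1}\, w_i)}{\sum_{w} C(w_{i-1}\, w)}
$$

With the example sentence (lowercased), "the" is followed once by "quick" and once by "lazy", so $P(\text{quick} \mid \text{the}) = 1/2$, while $P(\text{brown} \mid \text{quick}) = 1/1 = 1$.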

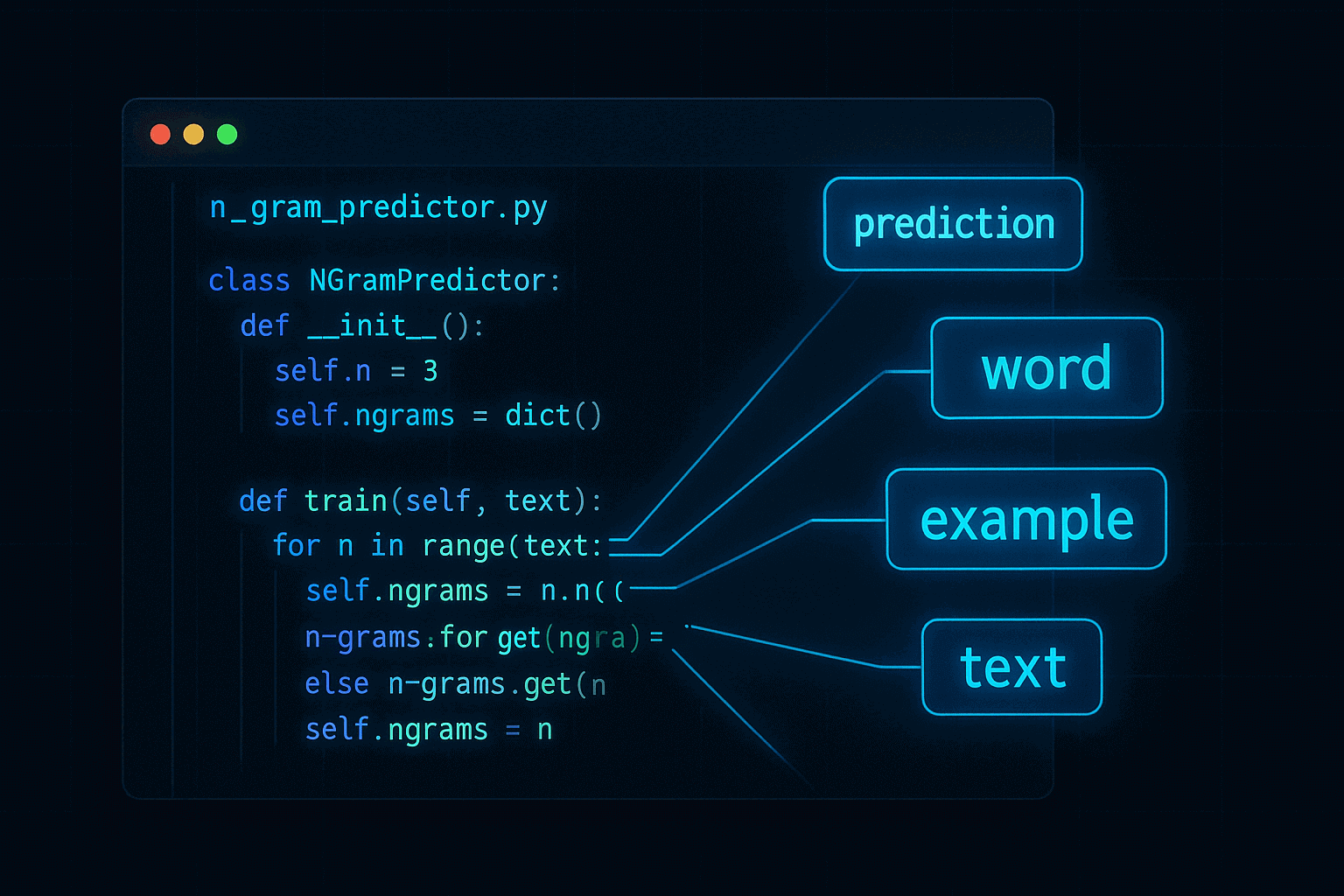

Building It: Easier Than I Expected

I wrote the whole thing in JavaScript (Node.js) because I wanted something I could easily share and that others could run without complicated setup.

The core components are:

1. Tokenization

Breaking text into words. Sounds simple, but you need to handle punctuation, capitalization, and special characters. I kept mine straightforward – lowercase everything and strip punctuation.
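A sketch of that tokenizer in Node.js, with one assumption not stated above: straight apostrophes are kept so contractions like "it's" stay one token. The `\p{L}` and `\p{N}` Unicode property escapes (which require the `u` flag) keep accented letters and digits; hyphens count as punctuation, so "well-known" becomes two tokens. `tokenize` is a hypothetical name:

```javascript
// Lowercase, replace punctuation with spaces, split on whitespace.
function tokenize(text) {
  return text
    .toLowerCase()
    // Anything that isn't a letter, digit, straight apostrophe or whitespace becomes a space.
    .replace(/[^\p{L}\p{N}'\s]/gu, " ")
    // Split on runs of whitespace and drop the empty strings left at the edges.
    .split(/\s+/)
    .filter(Boolean);
}

console.log(tokenize("Hello, World! It's a GREAT day.").join(" "));
// hello world it's a great day
```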

2. N-gram Creation

Deciding how much context to use. Bigrams (2 words) are fast but less accurate. Trigrams (3 words) capture more context but need more training data. I went with bigrams as a good starting point.
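One way to generate the N-grams, sketched with a hypothetical `makeNgrams(tokens, n)`: each entry pairs the previous n - 1 words with the word that followed them. Joining the context words with a space so they can serve as a Map key is an assumption of this sketch:

```javascript
// Pair each word with the (n - 1) words before it.
function makeNgrams(tokens, n) {
  const pairs = [];
  for (let i = n - 1; i < tokens.length; i++) {
    // Context key: the previous n - 1 tokens joined by a space.
    const context = tokens.slice(i - n + 1, i).join(" ");
    pairs.push([context, tokens[i]]);
  }
  return pairs;
}

const tokens = ["the", "quick", "brown", "fox", "jumps"];
console.log(makeNgrams(tokens, 2)); // bigrams: 1 word of context, 4 pairs
console.log(makeNgrams(tokens, 3)); // trigrams: 2 words of context, 3 pairs
```

Every trigram context is more specific than a bigram context, so in the same text each one is seen fewer times. That sparsity is why trigrams need more training data.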

3. Frequency Counting

Using JavaScript Maps to store word sequences and their following words. This is where the "counting" happens.
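A sketch of the counting step, assuming a model shaped as `Map<context, Map<nextWord, count>>` and a hypothetical `train(model, tokens, n)` that can be called repeatedly to accumulate counts across sentences:

```javascript
// Add the N-gram counts from one token list into `model`.
function train(model, tokens, n = 2) {
  for (let i = n - 1; i < tokens.length; i++) {
    const context = tokens.slice(i - n + 1, i).join(" ");
    const next = tokens[i];
    let followers = model.get(context);
    if (!followers) {
      followers = new Map(); // first time we see this context
      model.set(context, followers);
    }
    followers.set(next, (followers.get(next) || 0) + 1);
  }
  return model;
}

const model = new Map();
train(model, "the cat sat on the mat".split(" "));
train(model, "the cat ran".split(" "));
console.log(Object.fromEntries(model.get("the"))); // { cat: 2, mat: 1 }
```

A `Map` fits better than a plain object here: a token such as "constructor" or "__proto__" cannot collide with built-in object properties, and iteration follows insertion order.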

4. Prediction Logic

Given a context, look up what words typically follow and return the most common one (or top N predictions with probabilities).
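A sketch of the prediction step, assuming the same nested-Map shape (the name `predictTopK` is hypothetical). Each probability is a follower's count divided by the total count for that context, so these are relative frequencies over all followers, not only the top k:

```javascript
// Return the top `k` followers of `context` with relative-frequency probabilities.
function predictTopK(model, context, k = 5) {
  const followers = model.get(context);
  if (!followers) return []; // unseen context: nothing to predict
  let total = 0;
  for (const count of followers.values()) total += count;
  return [...followers]
    .map(([word, count]) => ({ word, probability: count / total }))
    .sort((a, b) => b.probability - a.probability)
    .slice(0, k);
}

// A tiny hand-built model: after "the", "cat" was seen 3 times and "dog" once.
const model = new Map([["the", new Map([["cat", 3], ["dog", 1]])]]);
for (const { word, probability } of predictTopK(model, "the")) {
  console.log(`${word} (${(probability * 100).toFixed(1)}%)`);
}
// cat (75.0%)
// dog (25.0%)
```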

The entire predictor class is under 200 lines of code. No external ML libraries required.

Training It: The Fun Part

I trained my model on two datasets:

General English Corpus

Basic sentences covering common patterns. This gives the model a foundation of everyday language.

Domain-Specific Data

I scraped content from Monology.io (an AI chatbot platform) to see if the model could learn specialized vocabulary. I weighted this data five times more heavily than the general corpus to give the model strong domain expertise.
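The post doesn't say exactly how the 5x was applied. For plain counting, training on a text in five separate passes and multiplying its counts by five give the same result. A sketch assuming a hypothetical `weight` parameter, with made-up example sentences:

```javascript
// Like plain bigram counting, but each occurrence adds `weight` instead of 1.
// weight = 5 gives the same counts as training on the text in 5 separate passes.
function trainWeighted(model, tokens, weight = 1) {
  for (let i = 1; i < tokens.length; i++) {
    const context = tokens[i - 1];
    const next = tokens[i];
    if (!model.has(context)) model.set(context, new Map());
    const followers = model.get(context);
    followers.set(next, (followers.get(next) || 0) + weight);
  }
  return model;
}

const model = new Map();
trainWeighted(model, ["customer", "support", "team"], 1);       // generic sentence (made up)
trainWeighted(model, ["customer", "support", "automation"], 5); // domain sentence, weighted 5x
console.log(Object.fromEntries(model.get("support"))); // { team: 1, automation: 5 }
```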

The results were fascinating. After training, when I typed "AI chatbot", it predicted "builder" or "platform". When I typed "customer support", it predicted "automation" or "hub".

It actually learned the language of the domain!

What I Actually Learned

1. More Data ≠ Better Predictions (Always)

I thought throwing tons of text at it would make it smarter. Not really. Quality and relevance matter more than quantity. Training on 5x domain-specific data performed better than 50x generic text.

2. Context Window is Everything

Bigrams (1 word of context) work okay. Trigrams (2 words of context) work noticeably better. But 4-grams and beyond? Diminishing returns unless you have massive training data. There's always a sweet spot.

3. The "Cold Start" Problem is Real

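What happens when someone types a phrase the model has never seen? It just... doesn't predict anything. (NLP texts call this data sparsity, or the zero-frequency problem.) Classic N-gram systems handle it with smoothing, which reserves some probability for word pairs never seen in training, and backoff, which falls back to a shorter context when the longer one is unknown. Understanding this problem helped me appreciate why modern LLMs are so impressive.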
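A minimal backoff sketch with hypothetical names. This is simple "longest known context wins" backoff, not Katz backoff (which also discounts and renormalizes probabilities). It keeps count tables for contexts of 2, 1 and 0 words and drops the oldest context word until it finds a context the model has seen; the 0-word table is plain word frequency, so it always has an answer once anything has been trained:

```javascript
// tables[k]: Map(context of k words -> Map(next word -> count)), for k = 0..maxN-1.
function buildBackoffModel(tokens, maxN = 3) {
  const tables = [];
  for (let k = 0; k < maxN; k++) {
    const table = new Map();
    for (let i = k; i < tokens.length; i++) {
      const context = tokens.slice(i - k, i).join(" "); // "" when k = 0
      if (!table.has(context)) table.set(context, new Map());
      const followers = table.get(context);
      followers.set(tokens[i], (followers.get(tokens[i]) || 0) + 1);
    }
    tables.push(table);
  }
  return tables;
}

// Try the longest usable context first, then drop the oldest word and retry.
function predictWithBackoff(tables, contextWords) {
  for (let k = Math.min(contextWords.length, tables.length - 1); k >= 0; k--) {
    const context = contextWords.slice(contextWords.length - k).join(" ");
    const followers = tables[k].get(context);
    if (followers) {
      // Most frequent follower at this order (stable sort keeps first-seen on ties).
      return [...followers].sort((a, b) => b[1] - a[1])[0][0];
    }
  }
  return null; // only reachable if the model was trained on no text
}

const tokens = "the cat sat on the mat the cat ran".split(" ");
const tables = buildBackoffModel(tokens, 3);
console.log(predictWithBackoff(tables, ["on", "the"]));     // mat (trigram context seen)
console.log(predictWithBackoff(tables, ["dog", "the"]));    // cat (backs off to "the")
console.log(predictWithBackoff(tables, ["purple", "dog"])); // the (backs off to word frequency)
```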

4. Speed vs Accuracy Trade-offs

My model makes predictions in milliseconds. GPT-4? Can take seconds. For autocomplete, speed wins. For complex queries, accuracy wins. There's no one-size-fits-all solution.

The Business Connection: Why This Matters

While building this, I kept thinking about real-world applications. Companies like Monology are building production-grade AI chatbots that need to:

- Understand user intent instantly

- Provide accurate, contextual responses

- Handle domain-specific terminology

- Route conversations intelligently

My little N-gram predictor can't do all that. But it taught me why those problems are hard and how modern solutions solve them.

For example, Monology uses intent classification to route customer inquiries vs job applications. That's essentially sophisticated pattern matching – not too different from what my predictor does, just way more advanced.

Understanding the basics made me appreciate the complexity of production systems. It's the difference between using a tool and understanding the tool.

Interactive Demo: Try It Yourself

When you run the predictor, you get an interactive terminal where you can test predictions in real time:

Enter phrase: customer support

Best prediction: automation

Top 5:

1. automation (38.5%)

2. hub (28.9%)

3. queries (18.2%)

4. team (9.4%)

5. workflows (5.0%)

It's surprisingly satisfying to see it predict accurately based on patterns it learned!
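The repo's interactive mode isn't reproduced in this post. A comparable loop can be built with Node's built-in `readline` module; `formatPredictions`, `startRepl`, the `--interactive` flag, the file name `demo.js` and the toy model below are all hypothetical:

```javascript
const readline = require("readline");

// Format a ranked list of { word, probability } objects like the demo output.
function formatPredictions(predictions) {
  if (predictions.length === 0) return "No prediction (unseen context)";
  const shown = predictions.slice(0, 5);
  const lines = [`Best prediction: ${shown[0].word}`, `Top ${shown.length}:`];
  shown.forEach(({ word, probability }, i) => {
    lines.push(`${i + 1}. ${word} (${(probability * 100).toFixed(1)}%)`);
  });
  return lines.join("\n");
}

// Prompt loop: `predict` maps a cleaned phrase to [{ word, probability }].
function startRepl(predict) {
  const rl = readline.createInterface({ input: process.stdin, output: process.stdout });
  const ask = () => {
    rl.question("Enter phrase: ", (phrase) => {
      const cleaned = phrase.trim().toLowerCase();
      if (cleaned === "") {
        rl.close(); // an empty line ends the session
        return;
      }
      console.log(formatPredictions(predict(cleaned)));
      ask();
    });
  };
  ask();
}

// Toy stand-in for a trained bigram model (made-up numbers, illustration only).
const toy = new Map([
  ["hello", [{ word: "world", probability: 0.6 }, { word: "there", probability: 0.4 }]],
]);

// Run `node demo.js --interactive` to start the prompt.
if (process.argv.includes("--interactive")) {
  startRepl((phrase) => toy.get(phrase.split(" ").pop()) || []);
}
```

With a bigram model only the last word of the phrase matters, which is why the toy `predict` looks up `phrase.split(" ").pop()`.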

The Code: Fully Open Source

I put everything on GitHub: github.com/monology-io/ngram-word-predictor

The repo includes:

- ✅ Complete predictor implementation

- ✅ Training datasets

- ✅ Interactive testing mode

- ✅ Model save/load functionality

- ✅ Detailed documentation

Clone it, modify it, learn from it. That's what it's there for.
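The post doesn't describe the save/load file format. If the model lives in nested Maps as described in the Frequency Counting step, note that `JSON.stringify` turns a `Map` into `{}`. A sketch with hypothetical function names that converts to arrays of entries first:

```javascript
const fs = require("fs");

// Map(context -> Map(next -> count))  ->  JSON array of [context, [[next, count], ...]]
function serializeModel(model) {
  return JSON.stringify([...model].map(([context, followers]) => [context, [...followers]]));
}

// Inverse of serializeModel: rebuild the nested Maps from the JSON arrays.
function deserializeModel(json) {
  return new Map(JSON.parse(json).map(([context, followers]) => [context, new Map(followers)]));
}

function saveModel(model, filePath) {
  fs.writeFileSync(filePath, serializeModel(model), "utf8");
}

function loadModel(filePath) {
  return deserializeModel(fs.readFileSync(filePath, "utf8"));
}

const model = new Map([["customer", new Map([["support", 6]])]]);
const restored = deserializeModel(serializeModel(model));
console.log(restored.get("customer").get("support")); // 6
```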

Lessons for AI Implementation

Building this taught me things that directly apply to real AI projects:

1. Start Simple, Add Complexity When Needed

Don't reach for the most advanced solution first. Sometimes a simpler approach works better and is easier to debug.

2. Domain Knowledge > Model Complexity

Training on relevant, domain-specific data beats using a more complex model with generic data. Know your use case.

3. Understand What's Happening Inside

When things break (and they will), understanding fundamentals helps you fix them faster. Black boxes are fine until they're not.

4. Test in the Real World

Theory is great. But the interactive testing mode showed me real edge cases I never thought about. Build, test, iterate.

What's Next?

I'm planning to extend this project with:

- Smoothing techniques for unseen data

- Support for larger N-grams (4-grams, 5-grams)

- Comparison benchmarks against modern models

- Web interface for easier testing
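For the first item on that list, the textbook starting point is add-one (Laplace) smoothing: pretend every vocabulary word was seen one extra time after every context, so unseen pairs get a small nonzero probability, $P(w \mid c) = (C(c\,w) + 1) / (C(c) + V)$, where $C(c)$ counts all words seen after context $c$ and $V$ is the vocabulary size. A sketch with a hypothetical `laplaceProbability` function and a tiny made-up corpus:

```javascript
// Add-one (Laplace) smoothed bigram probability P(word | context).
function laplaceProbability(model, vocabulary, context, word) {
  const followers = model.get(context) || new Map(); // unseen context: no counts
  let contextTotal = 0;
  for (const count of followers.values()) contextTotal += count;
  return ((followers.get(word) || 0) + 1) / (contextTotal + vocabulary.size);
}

// Build plain bigram counts for a tiny corpus.
const tokens = "the cat sat on the mat".split(" ");
const vocabulary = new Set(tokens); // the, cat, sat, on, mat: V = 5
const model = new Map();
for (let i = 1; i < tokens.length; i++) {
  if (!model.has(tokens[i - 1])) model.set(tokens[i - 1], new Map());
  const followers = model.get(tokens[i - 1]);
  followers.set(tokens[i], (followers.get(tokens[i]) || 0) + 1);
}

console.log(laplaceProbability(model, vocabulary, "the", "cat")); // (1 + 1) / (2 + 5), about 0.286
console.log(laplaceProbability(model, vocabulary, "the", "sat")); // (0 + 1) / (2 + 5), about 0.143
```

Add-one smoothing is simple but tends to give unseen pairs too much probability on large vocabularies, which is why backoff and more refined smoothing methods are usually preferred in practice.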

But honestly, the real value was the learning process. I understand language models now in a way I didn't before.

Try Building One Yourself

If you're working with AI but feel like you're just plugging things together, I highly recommend building something from scratch. Pick any ML concept:

- Text classification

- Sentiment analysis

- Word prediction (like this)

- Recommendation system

Build the simplest possible version. You'll learn more than any tutorial can teach you.

Final Thoughts

We're in this interesting time where AI tools are incredibly powerful but also somewhat mysterious. Companies are implementing chatbots, automation, and AI assistants without really understanding what's under the hood.

That's fine for getting started. But if you want to really leverage AI effectively, understanding the fundamentals matters.

My word predictor isn't going to compete with GPT-4 (nor should it). But building it made me a better AI implementer. I understand why certain approaches work, when to use simple vs complex solutions, and how to debug when things go wrong.

Plus, it was genuinely fun. There's something deeply satisfying about watching a machine learn patterns from text and make accurate predictions.

Resources & Links

Project Repository: github.com/monology-io/ngram-word-predictor

Inspired by Monology.io: While my project is educational, if you need production-ready AI chatbots, check out Monology.io. They've built the real deal: a no-code chatbot platform with multi-agent intelligence, a visual workflow builder, and enterprise features. Everything I learned studying language models, they've implemented at scale.

Try their free trial if you're looking to automate customer support, lead generation, or sales workflows. No credit card is required, and it takes 5 minutes to set up.

Have questions about the project or want to discuss NLP implementations? Feel free to reach out or open an issue on the GitHub repo. Always happy to chat about AI, language models, and practical applications!